Another week of the year is done, and so Sunday Rundown #91 is here! The rhyme is unintentional, but not giving you updates on the digital world would be a crime. You caught me in a mood today 😂

I almost can’t believe it, but Google Tasks is finally kind of good

Google has spent the last half-decade cruelly teasing me with its to-do list app. Google Tasks first launched as a standalone app in 2018, and Google seemed to have big plans for its task manager tool. And then… nothing. Actually, worse than nothing: Google launched and developed so many disconnected reminder-setting products that it became nearly impossible to figure out where your tasks were, what they were, and how you were supposed to get anything done.

But in the last couple of months, something miraculous happened: Google actually fixed it. The company spent this spring combining its many Tasks and Reminders products into a single tool that is accessible almost everywhere and through almost any Google product. There are still some holes in the system, and a lot of task management features are still missing. But Google Tasks also has some unique upsides, and for the first time ever, I'm actually using it and enjoying it.

OpenAI launches custom instructions for ChatGPT

OpenAI just launched custom instructions for ChatGPT users, so they don’t have to write the same instruction prompts to the chatbot every time they interact with it — inputs like “Write the answer under 1,000 words” or “Keep the tone of response formal.”

The company said this feature lets you "share anything you'd like ChatGPT to consider in its response." For example, a teacher can say they are teaching fourth-grade math, or a developer can specify the programming language they prefer when asking for suggestions. A person can also specify their family size, so ChatGPT can tailor its responses about meal, grocery, and vacation planning accordingly.

While users can already specify these things while chatting with the bot, custom instructions are helpful for anyone who needs to set the same context frequently. The instructions also work with plug-ins, making it easier for plug-ins to suggest restaurants or flights based on your location.

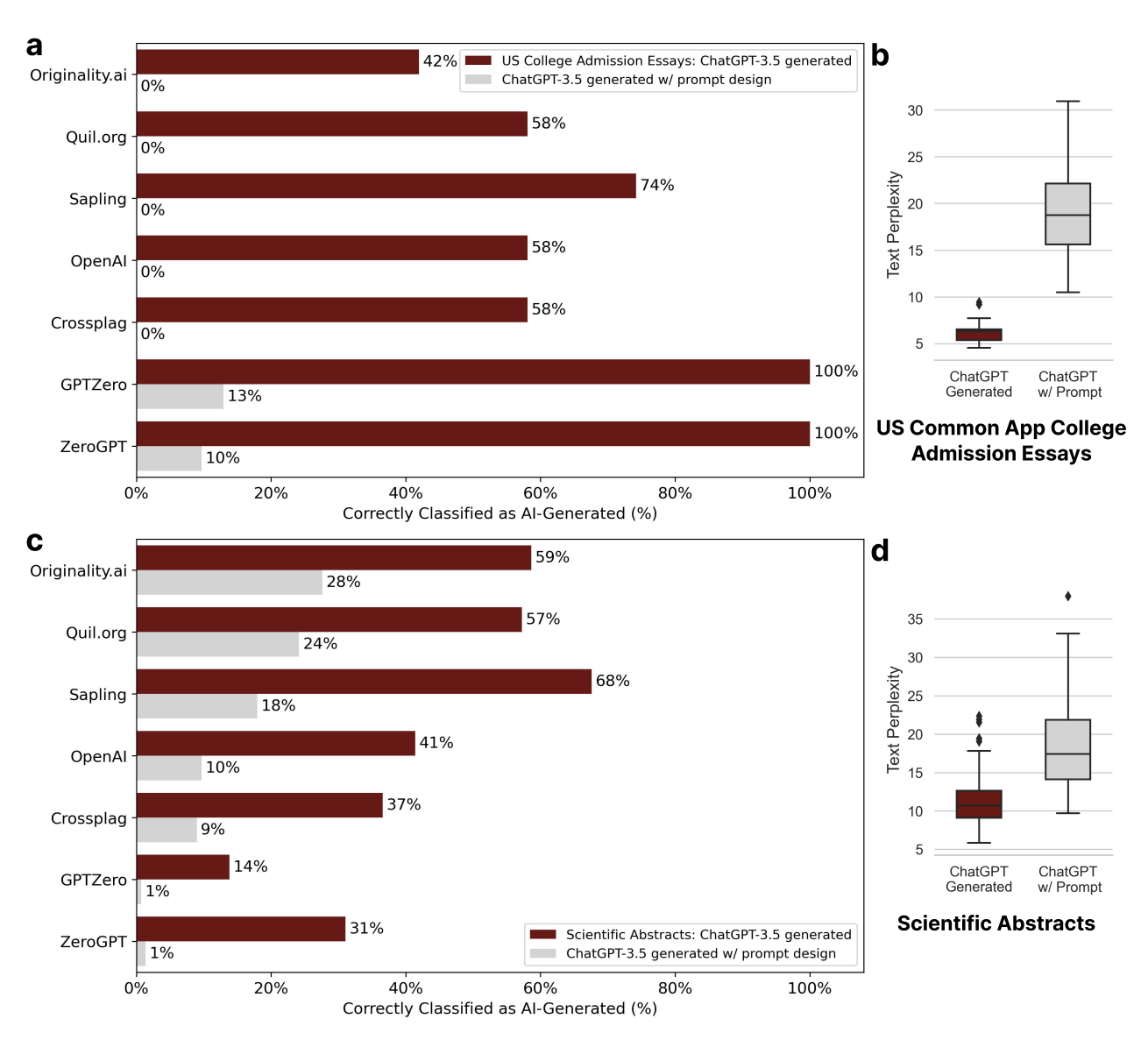

Should You Trust An AI Detector?

Generative AI now produces a growing share of online content, leaving many people to question how reliable AI detectors really are. In response, several studies have examined how well AI detection tools can distinguish human-written content from AI-generated content.

Researchers found that AI content detectors (tools meant to flag content generated by GPT) may be significantly biased against non-native English writers. Testing the detectors on writing samples from native and non-native English writers, the researchers found that the tools correctly identified the native English samples as human-written but consistently misclassified the non-native samples as AI-generated, flagging over half of them.

The findings suggest that GPT detectors may unintentionally penalize writers who use a more limited range of linguistic expression, underscoring the need to focus on the fairness and robustness of these tools. That could have significant implications, particularly in evaluative or educational settings, where non-native English speakers may be inadvertently penalized or excluded from global discourse. Left unaddressed, the bias could lead to "unjust consequences and the risk of exacerbating existing biases."

Bonus links

- Mastering GA4: How To Use The New Google Analytics Like A Pro

- 5 Ways to Create a Sustainable Multi-Location SEO Strategy [Infographic]

- Why would anyone make a website in 2023? Squarespace CEO Anthony Casalena has some ideas

- Are software companies good businesses?

- Amazon resurfaces ‘spend less’ messaging for back-to-school ads

Thank you for taking the time to read Sunday Rundown #91. If there's a story you'd like to see in this series, reply below or contact us.